Building a data science project from the ground up

A data science project aims to answer a specific question. Often we want to predict a specific characteristic (value) of an observation, before the actual value can be obtained. Here’s how we approach this.

A clear goal

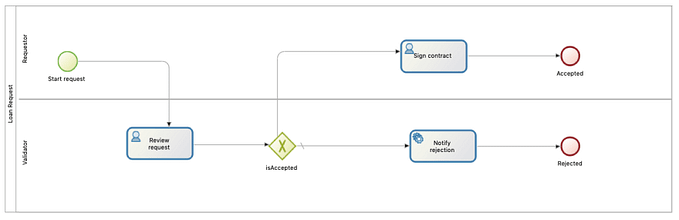

For each question for which we want a prediction, we implement a dedicated project. Here at Bonitasoft we decided to work on the prediction of whether the time constraint of a service level agreement (SLA) will be met. Many projects face this type of time constraint, that is, of a specific time limit in which to complete a process. Whether for legal constraints or for business efficiency, it is crucial to make sure that processes are executed in a given time-frame.

How to build a data science project

All data science projects share the same high-level framework. One can describe this frame work as like building a rocket with several stages, one on top of the other. At Bonitasoft we like this analogy, because we like the idea that the application of data science to BPM is a bit like “rocket science.” This kind of project requires a team of people with technical, business and data analysis skills.

If the team wants to leverage data to gain information and ultimately knowledge on the system, it must have a fair amount of data and understand them deeply. Basic understanding of the data is not enough!

Gathering the data

Most systems nowadays generate huge amount of logs, events, database records, and sometimes even noSQL documents. It is only a matter of having developers extract them and provide them to the data scientist. Is it really that easy? Yes and no. Having a lot of information is good, and extracting data from a system is (often) pretty straightforward. But the data arenot always readable or usable as is. Data need to be “explained,” interpreted, or translated so that a data scientist can use them.

Cleaning the data

Once the team has the data, it tries to identify missing or invalid values. Inconsistent data must be ignored in further analysis. This is what we call cleaning the data.

Exploring the data

The team can now visualize data aggregated on dashboards with graphs or tables. Such a view of the dataset allows team members to get a better understanding of the execution of the system and the business. From those graphs the team (mainly data scientists and business people) can identify trends or correlations between events and values observed.

As a result the data scientist is able to provide the team with guidance on the Machine Learning techniques that are potentially applicable to their situation. This guidance is often the result of hypothesis and guesswork based on trends and patterns spotted in the data regarding the goal of the project.

Building a Machine Learning (ML) model

Next the developers implement Machine Learning techniques, leveraging the data previously cleaned and explored. They build an ML model, a kind of mathematical formula applied to the data considered to be meaningful from the dataset. After comparing the various techniques efficiency on the dataset, the team can decide which one gives the best results. Unfortunately there is no unique solution that is best for all datasets. The team has to experiment with several until they find a satisfying solution.

When they have found a good model, the team can start making predictions.

At Bonitasoft we are in the process of implementing a new module that will predict if a process will respect its SLA or not, and will then let the process users react to this prediction to correct the situation if necessary.

Stay tuned, for more articles on Data Science applied to BPM!

This story by Olan Anesini and Nicolas Chabanoles was originally published on towardsdatascience.com on June 1, 2018.